Software Engineering¶

We now briefly discuss our software engineering practices that help us to ensure the

transparency, reliability, scalability, and extensibility of the respy package.

Program Design¶

We build on the design of the original authors (codes). We maintain a pure Python implementation with a focus on readability and a scalar and parallel Fortran implementation to address any performance constraints. We keep the structure of the Python and Fortran implementation aligned as much as possible. For example, we standardize the naming and interface design of the routines across versions.

Test Battery¶

We use pytest as our test runner. We broadly group our tests in four categories:

property-based testing

We create random model parameterizations and estimation requests and test for a valid return of the program. For example, we estimate the same model specification using the parallel and scalar implementations as both results need to be identical. Also, we maintain a an

f2pyinterface to ensure that core functions of our Python and Fortran implementation return the same results. Finally, we also upgraded the codes by Keane and Wolpin (1994) and can compare the results of therespypackage with their implementation for a restricted set of estimation requests that are valid for both programs.regression testing

We retain a set of 10,000 fixed model parameterizations and store their estimation results. This allows to ensure that a simple refactoring of the code or the addition of new features does not have any unintended consequences on the existing capabilities of the package.

scalability testing

We maintain a scalar and parallel Fortran implementation of the package, we regularly test the scalability of our code against the linear benchmark.

reliability testing

We conduct numerous Monte Carlo exercises to ensure that we can recover the true underlying parameterization with an estimation. Also by varying the tuning parameters of the estimation (e.g. random draws for integration) and the optimizers, we learn about their effect on estimation performance.

release testing

We thoroughly test new release candidates against previous releases. For minor and micro releases, user requests should yield identical results. For major releases, our goal is to ensure that the same is true for at least a subset of requests. If required, we will build supporting code infrastructure.

robustness testing

Numerical instabilities often only become apparent on real world data that is less well behaved than simulated data. To test the stability of our package we start thousands of estimation tasks on the NLSY dataset used by Keane and Wolpin. We use random start values for the parameter vector that can be far from the true values and make sure that the code can handle those cases.

Our tests and the testing infrastructure are available online. As new features are added and the code matures, we constantly expand our testing harness.

Continuous Integration Workflow¶

We set up a continuous integration workflow around our GitHub Organization. We use the continuous integration services provided by Travis CI. tox helps us to ensure the proper workings of the package for alternative Python implementations. Our build process is managed by Waf. We rely on Git as our version control system and follow the Gitflow Workflow. We use Github for our issue tracking. The package is distributed through PyPI which automatically updated from our development server.

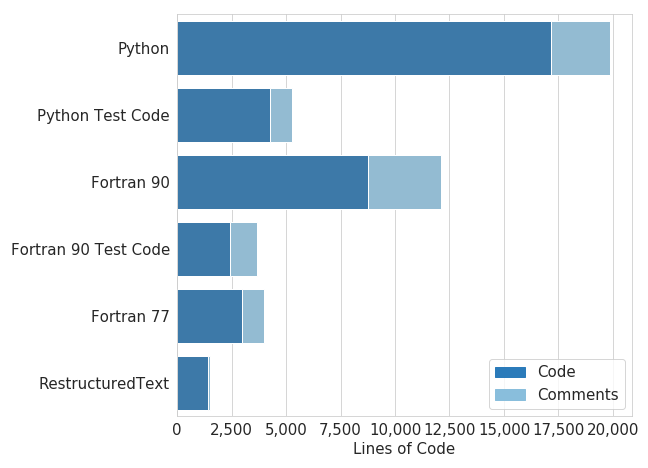

Lines of code¶