View and download the notebook here!

Scalability#

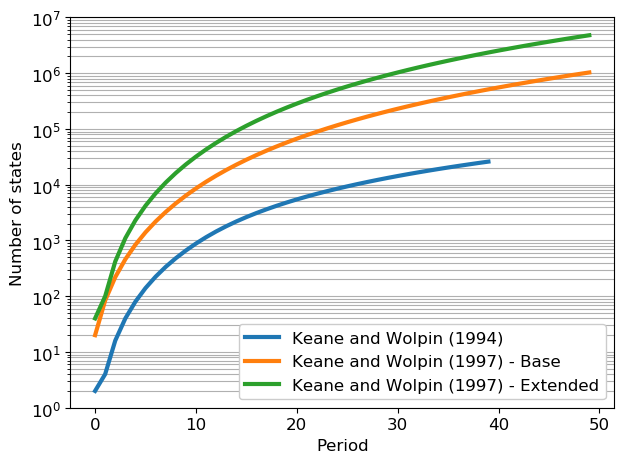

The solution and estimation of finite-horizon discrete choice dynamic programming model appears straightforward. However, it entails a considerable computational burden due to the well known curse of dimensionality (Bellman and Dreyfus, 1962). The figure below illustrates how the total number of states increases exponentially with each period. The size of the state space is shown for Keane and Wolpin (1994) (all models have the same state space) and the base and extended model of Keane and Wolpin (1997). The latter two models are different because the state space of the base parameterization does not include information on the previous activity which significantly reduces the complexity of the model. Note that the y-axis us log-scaled to compensate for the loss in readability due to exponential growth.

[6]:

fig

[6]:

In total there are 317,367 states in Keane and Wolpin (1994), 12,991,208 states in Keane and Wolpin (1997) - Base and 59,306,140 states in Keane and Wolpin (1997) - Extended.

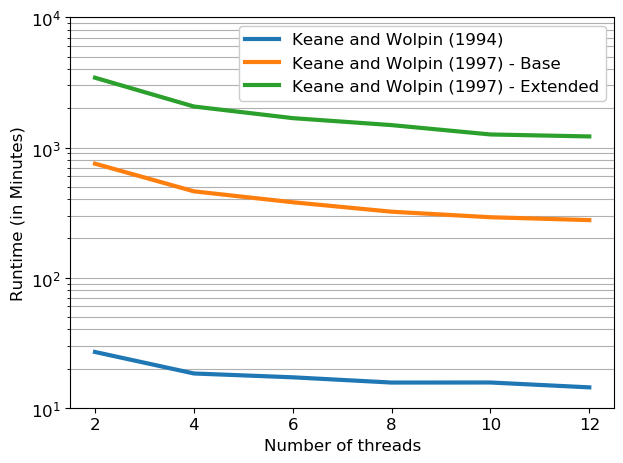

During an estimation, thousands of different candidate parameterizations of the model are appraised with respect to the sample likelihood. For each evaluation of the likelihood the \(n\)-dimensional integral of \(E\max\) (where \(n\) is the number of choices) needs to be approximated at all states. Below, we show the total computation time required for 1,000 evaluations of the criterion function as we increase the number of threads from two to twelve.

[10]:

fig

[10]:

Adding even more threads, however, does not lead to any further improvements.

For more details, see the script online or the complete Jupyter notebook in the repository under docs/software/scalability.ipynb.